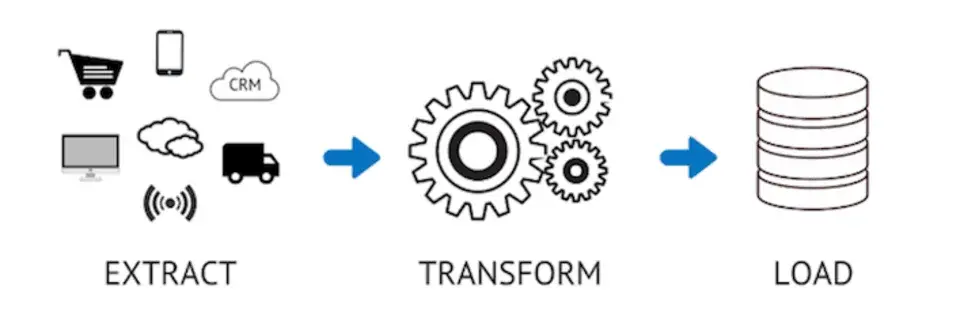

ETL stands for Extract, Transform, and Load. It’s a data integration process in which data is first acquired from one or more data sources, then cleaned or otherwise processed, and finally loaded into a target system such as a data warehouse, a database, or files such as Excel or PDF.

The easiest way to understand how ETL works is to understand what happens in each step of the process:

Extract: During data extraction, raw data is copied or exported from the source location(s) to a staging area. Data can be extracted from a variety of data sources, which can be structured or unstructured. Those sources include but are not limited to SQL or NoSQL servers, CRM systems, flat files, email, and web pages. Although extraction can be done manually by a team of data engineers, hand-coded data extraction can be time-intensive and prone to errors. ETL tools automate the extraction process and create a more efficient and reliable workflow.
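
To make the extract step concrete, here is a minimal Python sketch that copies raw rows from two kinds of sources into a staging area. Everything in it is an assumption for illustration: the `customers` table, the CSV contents, the `staging` dictionary, and the helper names are hypothetical, and an in-memory SQLite database stands in for a real SQL server.

```python
import csv
import io
import sqlite3


def extract_from_sql(conn, query):
    """Copy rows from a SQL source as a list of dicts, without changing them."""
    cursor = conn.execute(query)
    columns = [description[0] for description in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


def extract_from_csv(text_stream):
    """Copy rows from a flat file; every value arrives as a raw string."""
    return list(csv.DictReader(text_stream))


# Hypothetical SQL source (in-memory SQLite stands in for a real server).
source_db = sqlite3.connect(":memory:")
source_db.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
source_db.executemany(
    "INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")]
)

# Hypothetical flat-file source.
flat_file = io.StringIO("id,name\n3,Linus\n")

# The staging area: raw copies, grouped by source, not yet transformed.
staging = {
    "sql_customers": extract_from_sql(
        source_db, "SELECT id, name FROM customers ORDER BY id"
    ),
    "csv_customers": extract_from_csv(flat_file),
}
```

Note that the CSV rows keep `id` as a string while the SQL rows keep it as an integer. Extraction only copies data, so reconciling such differences is left to the transform step.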

Transform: In the staging area, the raw data undergoes data processing. Here, the data is transformed and consolidated for its intended analytical use case. This includes processes such as cleaning, filtering, validating, and authenticating data; performing calculations or summaries based on the raw data; conducting audits to ensure data quality; encrypting or otherwise protecting the data; and formatting the data into tables or joining tables to match the schema of the target data warehouse.
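
A few of these transformations (cleaning, validating, removing duplicates, and summarizing) can be sketched in plain Python. The `raw_orders` records, their field names, and the `transform` and `summarize` helpers below are hypothetical and exist only to illustrate the idea; a real pipeline would apply rules specific to its own schema.

```python
from collections import defaultdict

# Hypothetical raw rows as they might sit in the staging area.
raw_orders = [
    {"order_id": "1", "customer": " ada ", "amount": "19.99"},
    {"order_id": "2", "customer": "Grace", "amount": "5.00"},
    {"order_id": "2", "customer": "Grace", "amount": "5.00"},  # duplicate
    {"order_id": "3", "customer": "", "amount": "12.50"},      # missing customer
    {"order_id": "4", "customer": "Ada", "amount": "n/a"},     # invalid amount
]


def transform(rows):
    """Clean, validate, and de-duplicate rows; return (clean, rejected)."""
    seen_ids = set()
    clean, rejected = [], []
    for row in rows:
        customer = row["customer"].strip().title()  # cleaning
        try:
            amount = float(row["amount"])           # validating the type
        except ValueError:
            rejected.append(row)
            continue
        if not customer:                            # validating required fields
            rejected.append(row)
            continue
        if row["order_id"] in seen_ids:             # dropping duplicates
            continue
        seen_ids.add(row["order_id"])
        clean.append(
            {"order_id": int(row["order_id"]), "customer": customer, "amount": amount}
        )
    return clean, rejected


def summarize(clean_rows):
    """Compute a per-customer total from the cleaned rows."""
    totals = defaultdict(float)
    for row in clean_rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)


clean_orders, rejected_orders = transform(raw_orders)
totals_by_customer = summarize(clean_orders)
```

Keeping the rejected rows instead of silently dropping them gives the audit step something to inspect.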

Load: In this last step, the transformed data is moved from the staging area into a target data warehouse. Typically, this involves an initial loading of all data, followed by periodic loading of incremental data changes and, less often, full refreshes to erase and replace data in the warehouse. For most organizations that use ETL, the process is automated, well-defined, continuous, and batch-driven. ETL usually takes place during off-hours, when traffic on the source systems and the data warehouse is at its lowest.
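
The three loading patterns described here (initial load, incremental load, and full refresh) can be sketched as follows. This is an illustration under stated assumptions, not a production loader: the `sales` table, its columns, and the function names are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import sqlite3


def initial_load(conn, rows):
    """Create the target table and load all rows for the first time."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (order_id INTEGER PRIMARY KEY, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (:order_id, :amount)", rows)
    conn.commit()


def incremental_load(conn, changed_rows):
    """Insert new rows and overwrite rows whose primary key already exists."""
    conn.executemany(
        "INSERT OR REPLACE INTO sales VALUES (:order_id, :amount)", changed_rows
    )
    conn.commit()


def full_refresh(conn, rows):
    """Erase the table and reload it inside a single transaction."""
    with conn:  # commits on success, rolls back if an error is raised
        conn.execute("DELETE FROM sales")
        conn.executemany("INSERT INTO sales VALUES (:order_id, :amount)", rows)


# Hypothetical warehouse and data.
warehouse = sqlite3.connect(":memory:")
initial_load(
    warehouse,
    [{"order_id": 1, "amount": 10.0}, {"order_id": 2, "amount": 20.0}],
)
incremental_load(
    warehouse,
    [{"order_id": 2, "amount": 25.0}, {"order_id": 3, "amount": 30.0}],
)
rows_after_incremental = warehouse.execute(
    "SELECT order_id, amount FROM sales ORDER BY order_id"
).fetchall()

full_refresh(warehouse, [{"order_id": 9, "amount": 1.0}])
rows_after_refresh = warehouse.execute(
    "SELECT order_id, amount FROM sales ORDER BY order_id"
).fetchall()
```

After the incremental load, order 2 carries its updated amount and order 3 has been added; after the full refresh, only the reloaded rows remain.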

Image credit: DataDrivenInvestor, 2019

ETL Tools

In the past, organizations wrote their own ETL code. There are now many open-source and commercial ETL tools and cloud services to choose from. Many of these tools offer a visual, drag-and-drop interface as well as support for complex data management, security, and compliance.

Although many ETL tools exist, not all are built for the modern data environment. Organizations need tools that are flexible and quick enough for the pace of business today. Ideally, they should also support a variety of use cases. Some of the ETL tools used throughout the data landscape today include:

Incumbent or legacy ETL tools: These tools still provide core data integration functionality, but are slower, more brittle, and less flexible than contemporary options. Many of these tools are code-intensive and lack the automation that newer options provide, especially for real-time deployments.

Open-source ETL tools: Open-source ETL tools are far more adaptable than legacy tools. They work on data of varying structures and formats, whereas legacy tools work mainly on structured data. Open-source tools are also often faster than most legacy tools.

Cloud-based ETL tools: Cloud-based ETL tools make data readily available, and are flexible enough to account for the different structures associated with big data. Because of this flexibility, cloud-based ETL tools are more effective than on-premises options for dealing with hybrid cloud data sources.

Why You Need an ETL Tool

One of the main reasons is that these tools automate and streamline data pipeline processes. Reputable ETL tools reduce the time spent on manual processes such as writing code and mapping source data to target systems. They make these tasks easily repeatable, cost-effective, and faster.

Additionally, ETL tools are well suited to handling complex data management tasks. Widespread adoption of the cloud means data sources are more distributed than they used to be, while real-time data means that the speed of analytics must increase. Cloud ETL tools can meet these demands so organizations aren’t struggling to keep up.

Furthermore, ETL tools are important for meeting data governance demands. Regulations like GDPR hold organizations accountable for ensuring digital privacy. ETL tools with standardized, repeatable data governance processes help organizations comply with this and other regulations.

Finally, ETL tools are also key to implementing data quality, ensuring that data is both trustworthy and accurate. These tools facilitate data quality and data governance at enterprise scale (Talend Blog).

What are some other benefits of ETL tools?

External Links

- https://medium.datadriveninvestor.com/understanding-extract-transform-and-load-etl-and-its-necessity-in-data-analytics-world-with-an-64346016153d

- https://www.talend.com/resources/what-is-etl/

- https://www.ibm.com/topics/etl